Linking Google Analytics

Analytics can be used to enrich the records you extract from a website. By boosting search results based on their popularity (or another metric tracked by Google Analytics), you can improve the relevance of your website’s search.

With a little bit of configuration, your crawler can fetch Google Analytics metrics automatically and regularly.

Here’s a brief overview of the required steps:

- Have the administrator of your Google Analytics account provide read access to the email address of the Google Account you want to use (personal account or service account).

- Create a new External Data source on the Crawler interface.

- Verify that your crawler can connect to your Google Analytics view.

- Add your External Data unique name to the `externalData` property.

- Edit your crawler’s `recordExtractor` so that it integrates the metrics retrieved from Google Analytics into the output records (make sure this works as expected).

Optional: Create a service account

If you don’t want to link a personal account, you can create a service account on Google Cloud Platform.

- Create a project (or select an existing one) in the Google Cloud console at console.developers.google.com.

- Activate the Analytics Reporting API in that project.

- In the Credentials section, create a new Service account. You can skip the optional steps.

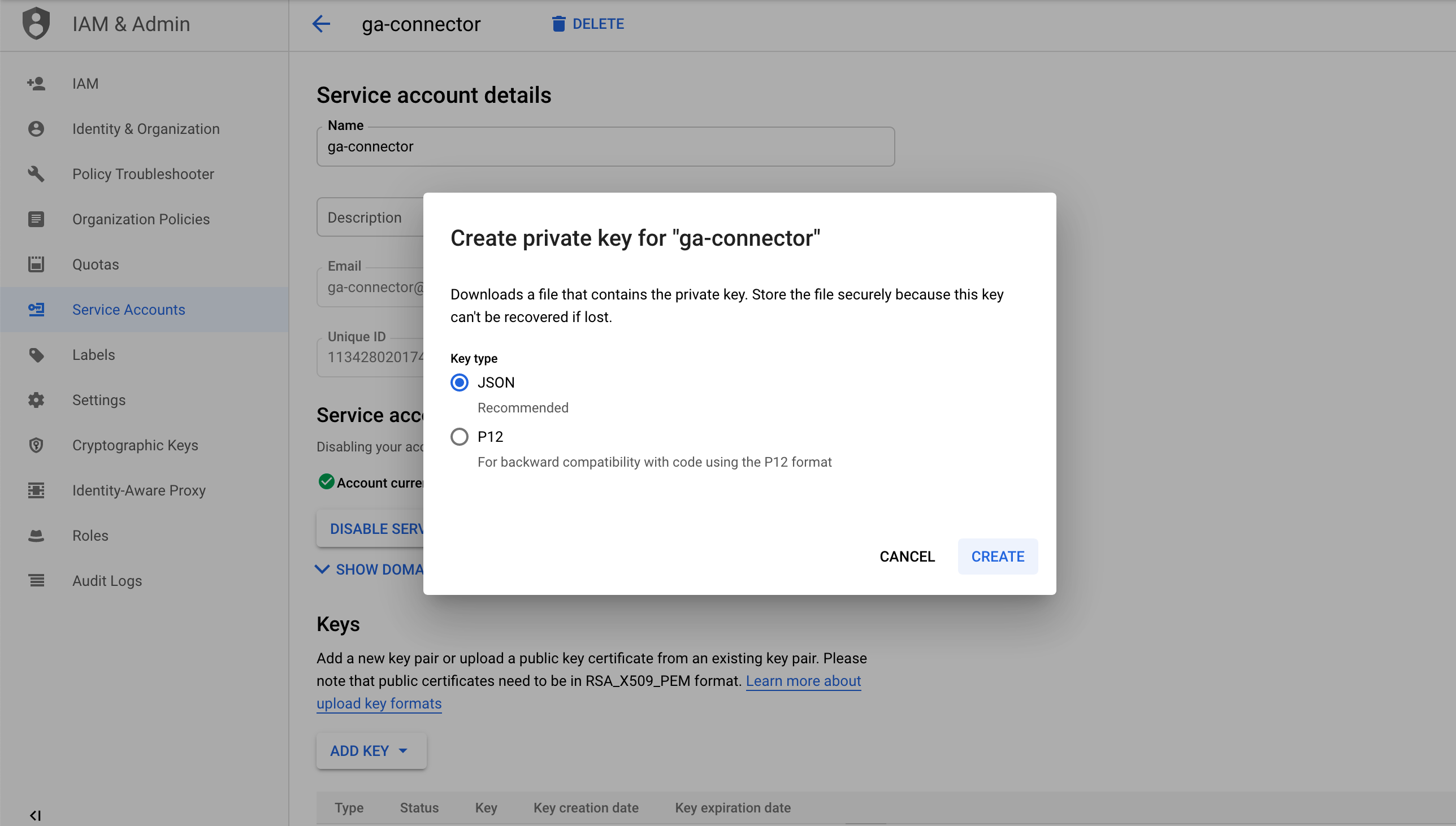

- Open the service account and click Add key -> Create new key. Select JSON and download the resulting JSON file (you need this file when you create the External Data).
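If you plan to use the ‘Sign in with email and private key instead’ option, the values it most likely expects come from this JSON key file. The following is a minimal sketch, assuming the standard Google service account key format (which includes `type`, `client_email`, and `private_key` fields); `readServiceAccountKey` is a hypothetical helper, not part of the Crawler.

```javascript
// Hypothetical helper: extract the email address and private key from the
// contents of a downloaded Google service account key file (JSON text).
// Assumption: the file follows the standard key format with `type`,
// `client_email`, and `private_key` fields.
function readServiceAccountKey(jsonText) {
  const key = JSON.parse(jsonText);

  // Keys created for a service account carry type "service_account".
  if (key.type !== 'service_account') {
    throw new Error('Not a service account key file');
  }
  if (typeof key.client_email !== 'string' || typeof key.private_key !== 'string') {
    throw new Error('Key file is missing client_email or private_key');
  }

  return {
    // The service account's email address.
    email: key.client_email,
    // PEM-encoded key; JSON.parse has already turned escaped "\n" into real newlines.
    privateKey: key.private_key,
  };
}
```

For example, you could call it with `fs.readFileSync('key.json', 'utf8')`, where `key.json` stands for the file you downloaded. The returned `email` is the service account address that the Google Analytics administrator needs to add in View User Management.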

Grant access to Google Analytics data

This first step shows how an administrator of a Google Analytics account can give read access to your Google Account or a service account.

Steps for an administrator:

- Log in to Google Analytics.

- Select Account, Property, and then the View that contains the analytics for the website you are crawling.

- Go to the Admin tab.

- In the View panel (on the right side of the screen), click on View User Management.

- Click the + button, then click “Add users”.

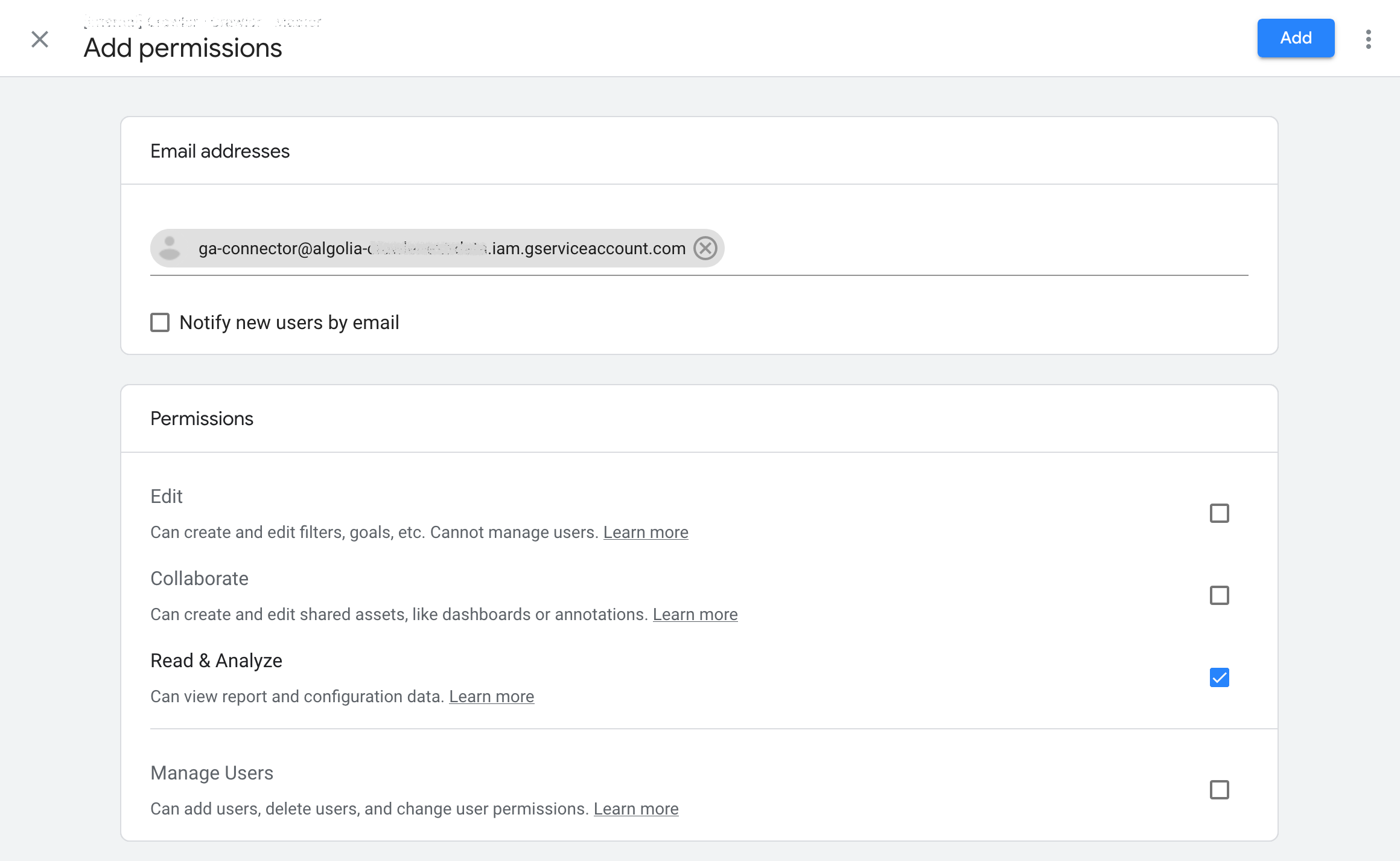

- Paste the email address of the Google Account you want to add.

- In the Permissions panel, make sure that only the “Read & Analyze” permission is enabled.

- Click the “Add” button to confirm.

Create the External Data

- Open the Crawler interface, and navigate to the External Data tab.

- Click the New External Data source button.

- Fill in the form:

- App ID: Select the Application ID you want to associate the External Data with.

- Unique name: The name used to reference this External Data in your crawler’s configuration. It must be unique within the scope of the selected App ID.

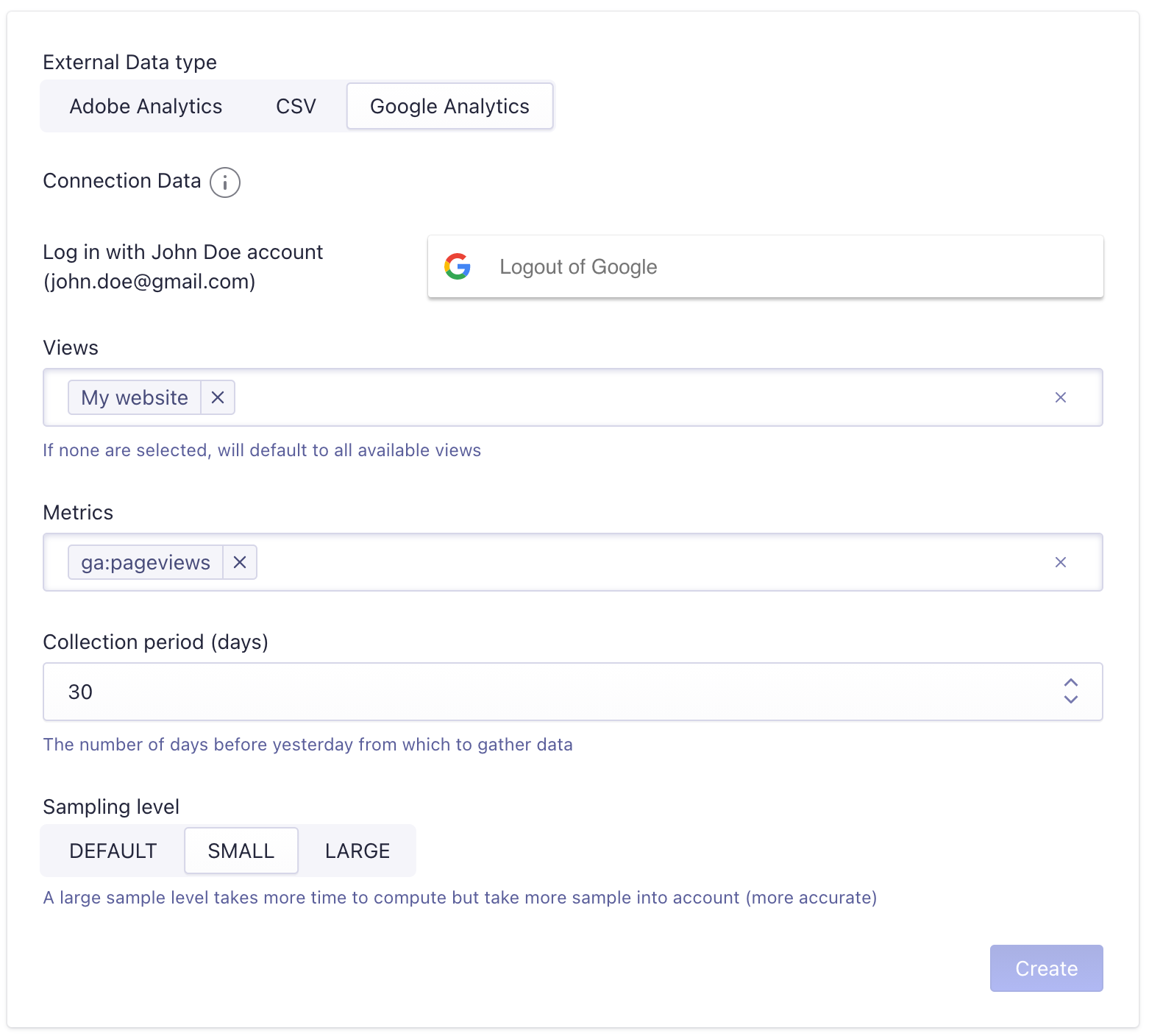

- External Data type: Select Google Analytics.

- Connection Data: Authenticate with Google OAuth by clicking on Sign in with Google.

- Note: If you want to use a service account, click the ‘Sign in with email and private key instead’ button.

- Views: Select zero or more views to fetch data from. By default, all available views are selected.

- Metrics: Select one or more metrics to be fetched.

- Collection period: The number of days before yesterday from which to gather data.

- Sampling level: The precision of your analytics data. A larger sampling level takes longer to compute but takes more samples into account, which makes the results more precise.
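To make the Collection period concrete, here is a minimal sketch that turns it into a start and end date. It assumes the window ends yesterday and starts `collectionPeriod` days before that, with both ends included; the Crawler may count the boundaries differently, and `collectionWindow` is a hypothetical name.

```javascript
// Hypothetical helper illustrating one reading of "Collection period":
// the window ends yesterday and starts `collectionPeriod` days before yesterday.
// Assumption: both boundary dates are included.
function collectionWindow(collectionPeriod, today = new Date()) {
  if (!Number.isInteger(collectionPeriod) || collectionPeriod < 0) {
    throw new RangeError('collectionPeriod must be a non-negative integer');
  }
  const DAY_MS = 24 * 60 * 60 * 1000;

  // Truncate to UTC midnight so the arithmetic is unaffected by daylight saving time.
  const todayUtc = Date.UTC(today.getUTCFullYear(), today.getUTCMonth(), today.getUTCDate());
  const end = new Date(todayUtc - DAY_MS); // yesterday
  const start = new Date(end.getTime() - collectionPeriod * DAY_MS);

  // YYYY-MM-DD, the format the Analytics Reporting API uses for date ranges.
  const fmt = (d) => d.toISOString().slice(0, 10);
  return { startDate: fmt(start), endDate: fmt(end) };
}
```

For example, a collection period of 30 evaluated on 2024-03-10 yields `{ startDate: '2024-02-08', endDate: '2024-03-09' }` under these assumptions.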

Test your External Data

In this step, you will check that the Crawler is able to connect to your Google Analytics view.

- Return to the External Data tab.

- Click the magnifying glass icon to the right of your External Data to open the Explore Data page.

- Trigger a manual refresh with the Refresh Data button, then verify that the extraction succeeded by checking the Status in the rightmost column. A green status means everything is working.

- If an error appears and you connected with a service account, check your credentials (the email address and private key).

Update your crawler’s configuration

In this step, edit the configuration of your crawler to use the External Data you just created.

- Go to your Crawler dashboard, select your crawler, and click on the Editor tab.

- Add the unique name of the External Data to your `externalData` property:

`externalData: ['myGoogleAnalyticsData']`

- Save your changes.

After you save your crawler’s configuration, your Google Analytics metrics will be available whenever you crawl your website. You must crawl your website at least once before the metrics appear. If an error occurs while fetching your analytics, it should be reported within one minute of the crawl starting.

Integrate analytics into records

In this step, you edit your recordExtractor so that it integrates metrics from Google Analytics into the records it produces.

- Go to your Crawler Admin, select your crawler, and go to the Editor tab.

- Read metric values from the External Data source you added earlier, and store them as attributes of your resulting records. If Google Analytics has data for the current page, the associated metrics should be present in the `dataSources` parameter of your `recordExtractor`:

```javascript
{
  // ...
  recordExtractor: ({ url, dataSources }) => {
    // "myAnalytics" must match one of the unique names defined in `externalData`
    const pageviews = dataSources.myAnalytics['ga:uniquePageViews'];
    return [
      {
        objectID: url.href,
        pageviews,
      },
    ];
  },
};
```

For a complete example, check the `recordExtractor` of the sample Google Analytics crawler configuration.

- In the Test a URL field of the configuration editor (which you can find in the Admin > Settings tab), type the URL of a page with analytics attached to it.

- Click on Run test.

- When the test completes, click the External data tab. You should see the analytics data extracted from Google Analytics for that page.

If this doesn’t work as expected, try adding a trailing / to your URL, or test with another URL.
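The extractor in this step reads `dataSources.myAnalytics['ga:uniquePageViews']` directly, so if the source object were ever missing for a page without analytics, that lookup would throw. The following minimal sketch guards against that and normalizes the metric to a number. It assumes the source may be absent and that metric values may arrive as strings (as they do in Analytics Reporting API responses); the constants `ANALYTICS_SOURCE` and `METRIC` are hypothetical names introduced for illustration.

```javascript
// Hypothetical constants: the source name must match a unique name in `externalData`.
const ANALYTICS_SOURCE = 'myAnalytics';
const METRIC = 'ga:uniquePageViews';

// A defensive variant of the recordExtractor shown above.
function recordExtractor({ url, dataSources }) {
  // Assumption: the source object may be absent for pages without analytics data.
  const metrics = (dataSources && dataSources[ANALYTICS_SOURCE]) || {};

  // Assumption: values may be strings such as "42"; Number() converts them,
  // and anything non-numeric (including undefined) falls back to 0.
  const raw = Number(metrics[METRIC]);
  const pageviews = Number.isFinite(raw) ? raw : 0;

  return [
    {
      objectID: url.href,
      pageviews,
    },
  ];
}
```

Defaulting to 0 keeps `pageviews` numeric on every record, which is convenient if you later boost results by popularity; omitting the attribute for pages without data is an equally valid design choice.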